A question many people ask themselves when running a benchmark is, “How accurate are the results, actually?” The thought is especially likely to come up when the results are bad. In this article we clarify what makes a benchmark reliable and explain how different techniques and benchmarking approaches affect accuracy.

How accurate is a benchmark?

A benchmark tool that is 100% accurate is probably impossible to find.

As in any scientific research, it would be incorrect to merely look at the results and assume they are 100% accurate without understanding the full context of the research. You need to understand the methodology, context, and conditions of the research to fully comprehend the results. For instance, comparing the results from two different benchmarking tools can be like comparing apples to oranges, since the methods used to measure performance can be completely different.

However, it’s important to remember that benchmarking tools always strive to be as accurate as possible. There are several indicators that can help determine the reliability of a benchmarking tool. Next, we will explore different factors that may impact the accuracy of a benchmark.

Method

One thing to be aware of from the start is that different benchmarking tools might employ different methodologies. When it comes to graphics benchmarks, the actual visuals in the benchmark are a very important aspect, as they can provide a rapid glimpse of ‘what kind of visuals can be achieved’ on that particular GPU.

Therefore, the temptation to include flashier visuals than necessary is quite high. Flashy visuals, of course, make the benchmark look cooler and can be more appealing to users. Hence, benchmark developers need to balance performance and visual creativity to avoid misleading users.

There are two distinct approaches used in graphics benchmarks, both of which will have an impact on how the scene looks when running the benchmark.

Time-based rendering

Time-based rendering means that regardless of how fast or slow the rendering process is, the entire scene must fit within a specific time interval. The total number of frames rendered is divided by the time it takes to complete the scene, resulting in a certain number of Frames Per Second (FPS). Higher FPS values indicate faster scene rendering, which leads to a better benchmark result. Time-based rendering uses the scene duration under optimal conditions as its reference.

When using time-based rendering, higher frame rates don’t necessarily result in visible improvements, except for smoother visuals. However, lower frame rates may show inconsistencies due to factors affecting scene complexity, making it difficult to compare results from different runs.

The challenge with time-based rendering lies in the need to complete the scene within a set time frame. Slower rendering may result in skipped frames, leading to inaccurate results. While skipped frames can occur in games when a system struggles with rendering demands, it’s not a desired outcome. The objective of time-based rendering is to assess performance based on consistency and rendering speed.
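The relationship between a fixed scene duration, frame count, and FPS can be sketched as follows. This is a minimal illustration, not how any particular benchmark is implemented: the function names, the constant per-frame cost, and the 60-second scene are all assumptions made for the example.

```python
def time_based_run(scene_duration_ms, frame_cost_ms):
    """Simulate a time-based run with a fixed scene duration.

    frame_cost_ms is a hypothetical, constant per-frame render time.
    Integer milliseconds keep the arithmetic exact.
    """
    # The scene clock keeps running while a frame renders, so a slower
    # device completes fewer frames within the same scene duration.
    frames = scene_duration_ms // frame_cost_ms
    fps = frames * 1000 / scene_duration_ms
    return frames, fps


def sampled_scene_times_ms(frame_cost_ms, count):
    """Scene timestamps (in ms) shown by the first `count` frames."""
    return [i * frame_cost_ms for i in range(count)]


fast = time_based_run(scene_duration_ms=60_000, frame_cost_ms=10)
slow = time_based_run(scene_duration_ms=60_000, frame_cost_ms=50)
print(fast)  # (6000, 100.0)
print(slow)  # (1200, 20.0)

# The slower device samples the same scene at coarser intervals,
# which is where skipped frames come from.
print(sampled_scene_times_ms(10, 4))  # [0, 10, 20, 30]
print(sampled_scene_times_ms(50, 4))  # [0, 50, 100, 150]
```

Both devices see a scene of the same length, but the slower one renders only a fifth of the frames, so the two runs do not process identical workloads.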

Frame-based rendering

Another approach is frame-based rendering, which some argue is more technically correct than time-based rendering because it ensures that every frame is rendered. This method calculates performance from the time each frame needs to render. In practice, all intermediate frames are displayed in a “filmstrip”-like manner on the side of the screen, and only every Nth frame is shown in full screen. The filmstrip display is used because some devices forcibly enable VSync (vertical synchronization), which syncs frame rendering with the monitor’s refresh rate so that no more frames are rendered than the monitor can display, ensuring a smooth graphics experience.

If VSync is active during the benchmark run and the benchmark could run at a higher frame rate than the VSync limit, the results can be inaccurate because VSync caps the frame rate. Thus, using a filmstrip display ensures every frame is rendered in a controlled and synchronized manner.
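To make the two ideas concrete, here is a small sketch of how an average frame rate might be derived from per-frame render times, and how a VSync cap would hide anything above the refresh rate. The function names and the frame times are hypothetical and chosen only for illustration.

```python
def frame_based_fps(frame_times_ms):
    """Average FPS when every frame is rendered and individually timed."""
    return len(frame_times_ms) * 1000 / sum(frame_times_ms)


def vsync_capped_fps(raw_fps, refresh_hz):
    """With VSync forced on, the displayed rate cannot exceed the refresh rate."""
    return min(raw_fps, refresh_hz)


frame_times_ms = [4, 5, 6, 5]  # hypothetical per-frame measurements
raw = frame_based_fps(frame_times_ms)
print(raw)  # 200.0
print(vsync_capped_fps(raw, refresh_hz=60))  # 60
```

In this example a device capable of 200 FPS would report only 60 FPS on a 60 Hz display if every frame had to be presented full-screen under VSync.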

While frame-based rendering can be considered more technically accurate, it may not be as visually appealing as time-based rendering. The scene can run very fast at high frame rates but appear very slow at low frame rates, making it look like you are streaming a movie over a really bad internet connection.

An example from GPUScore: Sacred Path, which uses frame-based rendering.

Which method is preferable?

In some situations, frame-based rendering is preferred over time-based rendering because it consistently produces reproducible results. However, in other cases, such as when evaluating the efficiency of graphics optimization techniques, time-based rendering may provide a better understanding of how these techniques scale against the visuals.

Specified resolution size

The parameters under which the evaluation is performed are also significant indicators of benchmark accuracy. For instance, the resolution at which the benchmark is rendered plays a crucial role when assessing the reliability of the results. Some benchmarks use a fixed resolution, while others may opt for the native resolution of the device, which is mostly applicable in the case of handheld devices.

Setting a fixed, standard resolution for the benchmark provides an objective means of comparison against a single reference point. However, most devices are designed to operate at maximum efficiency at a specific resolution known as the native resolution, which can vary from one device to another. In such cases, performance scales differently. Some devices may significantly outperform others when rendering at their native resolution, yet perform much worse in standard resolution mode. Whether the benchmark uses native or standard resolution, this should be stated in the benchmark’s specifications.
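One reason native-resolution results are hard to compare is that the amount of work per frame changes with the pixel count. The sketch below uses two example resolutions picked purely for illustration; they are assumptions, not taken from any specific device or benchmark.

```python
def pixels_per_frame(width, height):
    """Number of pixels the GPU has to produce for one frame."""
    return width * height


standard = pixels_per_frame(1920, 1080)  # assumed fixed benchmark resolution
native = pixels_per_frame(2560, 1440)    # assumed native resolution of a device
print(standard)  # 2073600
print(native)    # 3686400
print(round(native / standard, 2))  # 1.78
```

Pixel count is only a rough proxy for workload, but it shows why two devices running "the same" benchmark at their native resolutions are not necessarily doing the same amount of work.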

The technical implementations

One of the most critical factors to consider when assessing the accuracy of a benchmark is the approach used when implementing specific features in the graphics engine that supports the benchmark.

Typically, most benchmarks on the market rely on a proprietary graphics engine or renderer to execute the benchmark run. The way these features are implemented significantly impacts reliability. While a “one-size-fits-all” approach is often applicable, there are situations where certain approaches may favor specific devices or brands, while others may be less favorable. Although many users may not pay attention to this detail, the choice of renderer used in the benchmark can make a substantial difference.

The complexity of the features covered by a benchmark tool also affects its accuracy. For instance, in the case of ray tracing, you can choose to limit the benchmark to rendering ray traced shadows only, or you can include more ray traced features such as shadows, reflections, global illumination, ambient occlusion, and so on. Clearly specifying these technical implementations in the benchmark’s settings is crucial, as the performance balance varies between these examples.

Device response

Another very important aspect to consider is how the device responds during benchmark runs.

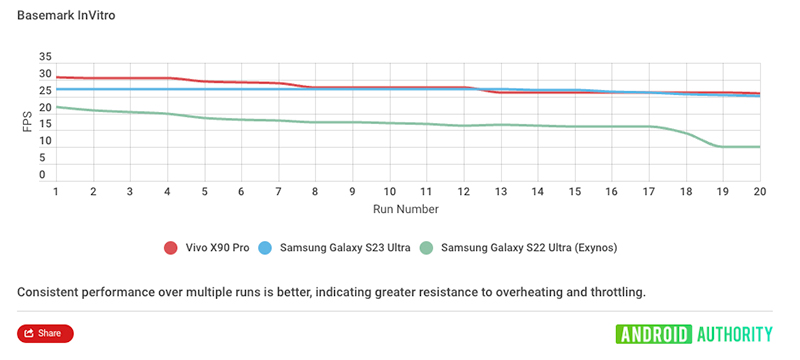

Sustained load over an extended period of time can lead to significant device heating. In such situations, the device’s performance may fluctuate considerably. However, when a device heats up during a benchmark run, it simulates its behavior under stress conditions, such as in high-end games, and should not be overlooked. In terms of hardware, performance often drops abruptly after some time and then stabilizes at a quasi-constant level.

Unfortunately, most benchmarks available on the market do not give enough time for the device to reach a constant level of performance. This limitation arises from practical reasons: running the benchmark continuously for the necessary duration is often impractical, as it would take a considerable amount of time.

Here’s an example from Android Authority, where they did 20 consecutive runs of GPUScore: In Vitro to find a constant performance level.
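Finding the constant performance level from a series of consecutive runs could be sketched like this. The scores below are invented for illustration (they are not Android Authority's data), and the 2% tolerance and the function name are assumptions.

```python
def sustained_level(scores, tolerance=0.02):
    """Find the earliest run from which all remaining scores stay within
    `tolerance` (relative) of their own mean.

    Returns (1-based run number, mean sustained score), or None if the
    scores never settle.
    """
    for start in range(len(scores) - 1):  # require at least two runs in the tail
        tail = scores[start:]
        mean = sum(tail) / len(tail)
        if all(abs(s - mean) <= tolerance * mean for s in tail):
            return start + 1, mean
    return None


# Hypothetical scores: a strong start, an abrupt drop, then a plateau.
scores = [1000, 990, 985, 900, 760, 755, 750, 752, 748, 751]
run, level = sustained_level(scores)
print(run)           # 5
print(round(level))  # 753
```

A single short run would report roughly 1000 here, while the sustained level under thermal load is closer to 753.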

Option to customize the benchmark’s settings

Similar devices from different brands may take different, non-equivalent approaches to how rendering features are implemented, resulting in different device behaviors.

In such cases, the most effective approach to assess performance is to incorporate as many optimizations or adaptations as possible, mirroring the way games optimize their performance for various devices. Major game titles achieve this by customizing certain features to ensure they run optimally on a broad range of branded devices.

Finding a standard benchmarking tool that can accurately evaluate performance across different brands is quite difficult. Therefore, it is beneficial for benchmarking tools to increase the granularity of feature optimizations, optimizing both the workload and the backend implementations as much as possible. This approach would increase both complexity and accuracy. Conversely, another approach would be to limit the optimizations to an extremely basic level, leaving no room for deviation. The results would then be quite accurate but not comprehensive enough.

To some extent, the chosen approach is reflected in how the final score is determined. In most cases, the final score results from a straightforward mathematical operation based on the average time needed to render a frame. It is possible that a more complex calculation could also enhance the accuracy of the tool.

Repeatability of results

Finally, the most crucial attribute of a benchmark tool is its repeatability. If the scores are repeatable, the benchmarking tool can generally be considered accurate. However, it’s important to note that there is always a margin of error to be aware of. This margin depends on various factors and conditions, such as temperature or background processes.

If a benchmark developer were to consider all the factors listed in this article, one could argue that the developed tool would be quite accurate. Nevertheless, without repeatability of results, the tool is compromised. The smaller the error margin between consecutive tests, the more accurate the benchmark is.
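One way to put a number on the error margin between consecutive tests is to look at the spread of the scores relative to their mean. The sketch below uses Python's standard `statistics` module; the scores are made up for illustration, and the choice of coefficient of variation and min-max spread as the metrics is an assumption, not something a specific benchmark prescribes.

```python
import statistics


def repeatability(scores):
    """Summarize run-to-run variation for a list of benchmark scores."""
    mean = statistics.mean(scores)
    stdev = statistics.stdev(scores)  # sample standard deviation
    return {
        "mean": mean,
        "cv_percent": stdev / mean * 100,  # coefficient of variation
        "spread_percent": (max(scores) - min(scores)) / mean * 100,
    }


# Hypothetical scores from five consecutive runs on the same device.
scores = [1502.0, 1498.0, 1510.0, 1495.0, 1505.0]
summary = repeatability(scores)
print(summary["mean"])                       # 1502.0
print(round(summary["cv_percent"], 2))       # 0.39
print(round(summary["spread_percent"], 2))   # 1.0
```

The lower these percentages are across repeated runs under the same conditions, the more repeatable the benchmark is.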

Can a benchmark be cheated?

As with almost everything in the digital environment, benchmarks can also be manipulated. For example, one common method is app throttling, in which background applications are hampered so that the device can allocate most of its resources to the benchmarking tool. Since the conditions do not reflect normal usage, the benchmark results do not represent the actual performance. Another example is running the benchmark on a cool device, which often yields better scores than running it on a hot device. It is also possible to manipulate benchmarks by boosting the GPU or CPU while the benchmark is running.

These practices are well-documented, and there has been extensive discussion on this topic. Unfortunately, benchmark tools cannot completely prevent such manipulation. However, by improving result accuracy and methodology, benchmarks may be better equipped to detect suspicious behavior.

Accuracy depends on several factors

The reliability of a benchmark tool depends on a combination of several factors, not just one or two conditions. Repeatability and reproducibility are among the most crucial aspects, but the methodology, feature granularity, consistency, and approach are also worth considering. Examining these details can provide end-users with a clearer understanding of how reliable a benchmark tool is.